Digital potato

2021-08-26

Attention conservation notice: Autobiographical scribbles about high school coding attempts with little relevance for anyone.

holy_shit_I_can_see_a_six.png

Created: 2016-05-19

The grayscale, potato-looking image you see on this page is an artifact of my early experiments with neural networks in high school, when I was working through Michael Nielsen's Neural Networks and Deep Learning. In that book, Nielsen explains how to implement a basic feedforward neural network from the ground up in NumPy, relying only on basic matrix operations, and how to use it to classify MNIST digits. He derives the backprop formulae carefully and slowly enough that high school me could kinda vaguely understand them.

I noticed that gradient descent could not only optimize the parameters to minimize a cost function, but also optimize an input to maximize a particular output of the network. So, you could make the model imagine something. Obviously I was tripping on DeepDream posts I saw on r/machinelearning at the time, so it's hard for me to say how much of this was innate creativity and how much was just inspired by the much fancier work others were doing.
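For the curious, here is a minimal NumPy sketch of that idea. It is not the original code, just a reconstruction under assumptions: a sigmoid feedforward network in the style of Nielsen's book, a hypothetical `random_network` helper that stands in for trained MNIST weights, and made-up values for the step count and learning rate. The only twist on ordinary backprop is that the gradient is pushed all the way down to the input pixels, and the pixels are updated instead of the weights.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def random_network(sizes, seed=0):
    """Hypothetical stand-in for a trained network: random weights and biases."""
    rng = np.random.default_rng(seed)
    weights = [rng.standard_normal((n_out, n_in)) / np.sqrt(n_in)
               for n_in, n_out in zip(sizes[:-1], sizes[1:])]
    biases = [rng.standard_normal((n_out, 1)) for n_out in sizes[1:]]
    return weights, biases

def forward(x, weights, biases):
    """Return the activations of every layer for an input column vector x."""
    activations = [x]
    for w, b in zip(weights, biases):
        activations.append(sigmoid(w @ activations[-1] + b))
    return activations

def input_gradient(x, target, weights, biases):
    """Gradient of the target output neuron's activation with respect to x."""
    activations = forward(x, weights, biases)
    # Start with d(output[target]) / d(output), which is a one-hot vector.
    grad = np.zeros_like(activations[-1])
    grad[target] = 1.0
    # Walk backwards through the layers, all the way down to the input.
    for w, a in zip(reversed(weights), reversed(activations[1:])):
        grad = w.T @ (grad * a * (1.0 - a))  # sigmoid'(z) = a * (1 - a)
    return grad

def imagine(target, weights, biases, steps=200, lr=1.0, seed=0):
    """Gradient ascent on the input pixels, starting from random noise."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 1.0, size=(weights[0].shape[1], 1))
    for _ in range(steps):
        x += lr * input_gradient(x, target, weights, biases)
        x = np.clip(x, 0.0, 1.0)  # keep pixel intensities in a valid range
    return x
```

With real trained weights in place of `random_network`, `imagine(6, weights, biases).reshape(28, 28)` would give a 28x28 array that can be plotted as a grayscale image.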

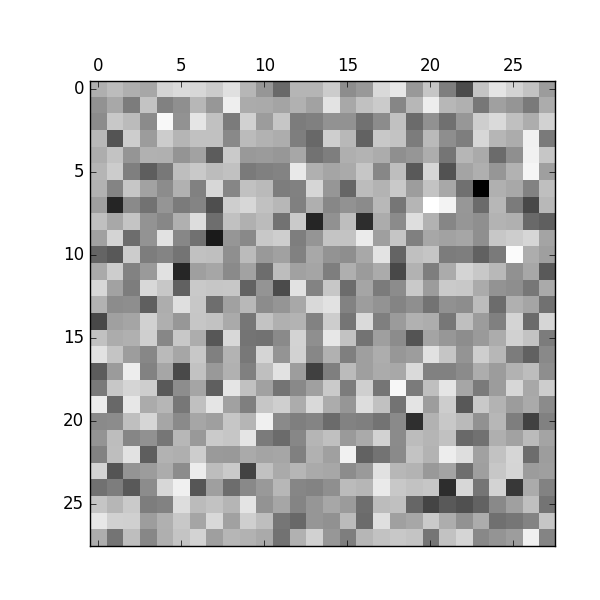

So, I did that. I took a trained MNIST classifier network and optimized random noise into an input image that the network would classify as a "6". The result was... puzzling.

I was very confused. This does not look like a six at all.

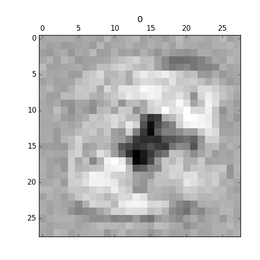

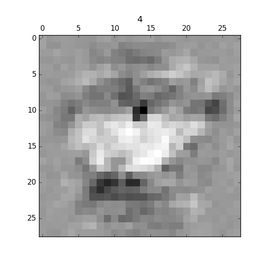

I thought I might have made a mistake, but I wasn't sure where. I decided to add a bit of Gaussian blur to the process, so that the pixel intensities wouldn't jump so sharply. Then, all of a sudden...

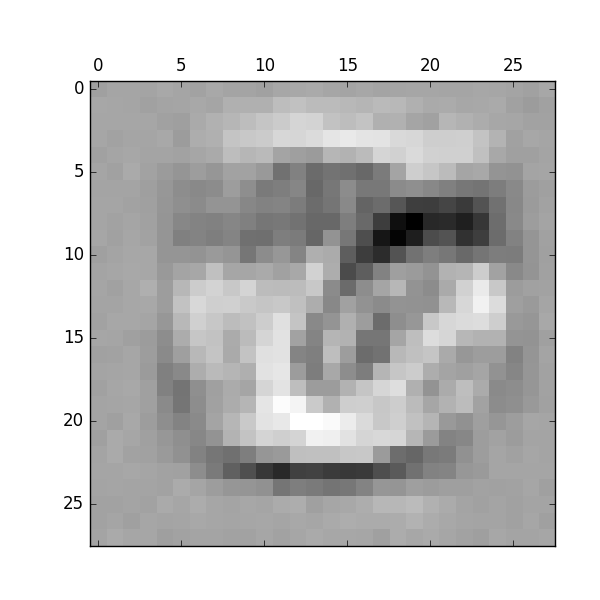

Whoa boi, wtf. It kind of does have six-like features. Black means "for sure there is no ink here", while white means "yeah, there should be some ink here". You can clearly see where the 6 has the little hook that distinguishes it from an 8.
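The blur trick can be sketched roughly like this. Again, this is a reconstruction and not the original code: it assumes the blur is applied to the image itself after every gradient step, and the function names, `sigma`, and `lr` are all made up for illustration. The gradient `grad` is whatever your network's backward pass gives you for the input pixels.

```python
import numpy as np

def gaussian_kernel(sigma):
    """1D Gaussian kernel, truncated at about 3 sigma and normalized to sum to 1."""
    radius = max(1, int(3 * sigma))
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_blur(image, sigma=1.0):
    """Separable Gaussian blur of a 2D image, with edge padding."""
    kernel = gaussian_kernel(sigma)
    radius = len(kernel) // 2
    padded = np.pad(image, radius, mode="edge")
    # Blur along the rows first, then along the columns.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

def blurred_ascent_step(x, grad, lr=0.5, sigma=1.0, side=28):
    """One gradient ascent step on the pixels, followed by a blur.

    x and grad are column vectors of shape (side * side, 1).
    """
    image = (x + lr * grad).reshape(side, side)
    image = np.clip(gaussian_blur(image, sigma), 0.0, 1.0)
    return image.reshape(side * side, 1)
```

Blurring after each step smooths out the high-frequency pixel noise that the plain optimization is free to exploit, which is presumably why the smoother result looks more like pen strokes.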

I was suddenly quite freaked out: I had just made the neural network imagine something, but clearly its imagination was not human. I was really into all kinds of singularity hype at the time, having read Bostrom's Superintelligence not long before, so I felt quite a rush playing around with this little computer brain.

That was the point when I really got into neural networks. Without being overly dramatic, I think Nielsen's book changed my life; ever since I read it, I've been doomed to focus my development, at least in large part, on these little machine learning bois.

Of course, now I know that nothing is as simple as it seems: neural networks can't solve everything, adding more layers isn't sufficient to save the world, the singularity is most likely not coming any time soon, etc. In any case, I'm still rather sentimental about these funky little plots.

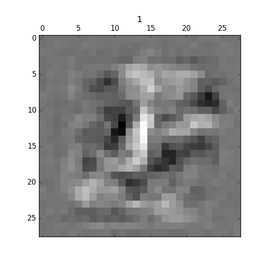

Bonus: a gallery of digits I plotted based on those models

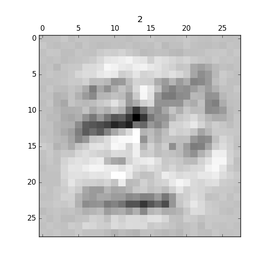

and an imaginary adversarial attack "1" that I printed out and hung over my bed